In the Editor, Unity sees both ARCore and ARKit as possible AR implementations and warns you that it sees two but will only be able to use one at runtime. On an Android or iOS device, there should only be one package present, so you shouldn't get this warning.

The project consists of four main parts: the ARCore-based localisation, the QR-code repositioning, the navigation (NavMesh), and the AR view. I assume that readers who want to recreate this project already have a basic knowledge of Unity and know how to set up a Unity project with ARCore. This project is developed for Android. In the ARCore SDK for Unity, changing the camera direction in an active session requires disabling and re-enabling the session for the change to take effect.

Prerequisites¶

- A walkthrough of the Google ARCore Quickstart

- An ARCore enabled Android device

- Unity 2017.4.9f1 or later with Android Build Support

- Note that Unity 2018.2 has a known bug with the Android render target, so it’s not supported. See bug tracking here.

- Android SDK 7.0 (API Level 24) or later

- Accept all the licenses from Android SDK. For example, if you are on macOS:

- $ cd ~/Library/Android/sdk/tools/bin

- $ ./sdkmanager --licenses

- Accept all the licenses.

Getting ARCore SDK for Unity¶

Download ARCore SDK for Unity 1.4.0 or later

Open Unity and create a new empty 3D project

Select Assets > Import Package > Custom Package, use the downloaded arcore-unity-sdk-v1.4.0.unitypackage from your disk.

In the import dialogue, make sure everything is selected and click Import.

Accept any API upgrades if prompted.

Importing 8i Unity Plugin¶

Within the newly created project, extract the 8i Unity Plugin into the Assets folder, as stated in the Quick Start section.

You should have the directory structure like this:

Fix any warnings that pop up in the 8i Project Tips window, including Android Unpack Scene.

Configure the Unity Project¶

Open the project in Unity

If you are prompted to upgrade the Unity version, click yes.

Open scene HelloAR by double clicking Assets/GoogleARCore/Examples/HelloAR/Scenes/HelloAR

Select File > Build Settings; a build dialogue should come up. Click the Player Settings… button and a PlayerSettings inspector should appear. In the Inspector window, find Other Settings - Metal Editor Support and uncheck it. This is important for previewing 8i’s hologram content in Unity.

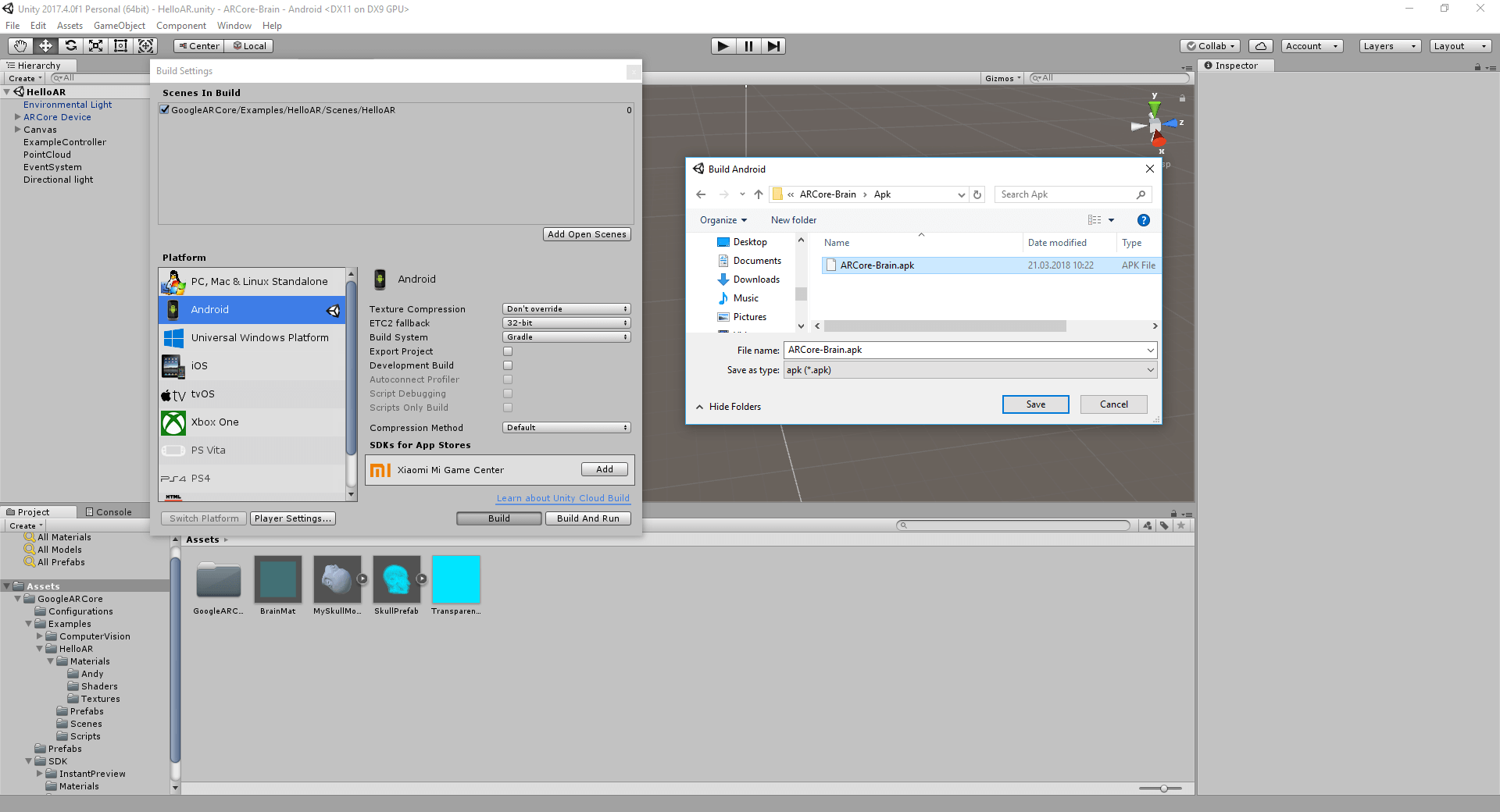

Still in the Build Settings dialogue, choose Android under Platform and click the Switch Platform button.

Make sure the platform has switched to Android and that the HelloAR scene is ticked; use Add Open Scenes if it is not listed.

Still in the Build Settings dialogue, click the Player Settings… button. A PlayerSettings inspector should appear. In the Inspector window, a few fields need to be configured:

Other Settings - Package Name: set to a reverse-DNS-style name, e.g. com.yourcompany.arsample

Other Settings - Uncheck Auto Graphics API and explicitly set OpenGL ES 3 as the graphics API

Other Settings - Multithreaded Rendering: uncheck

Other Settings - Minimum API Level: set to Android 7.0 or higher. Note that you need to have the right version of the Android SDK installed and configured in Unity > Preferences.

Other Settings - Target API Level: set to Android 7.0 or higher. Note that you need to have the right version of the Android SDK installed and configured in Unity > Preferences.

XR Settings - ARCore Supported: tick on

Your First 8i Hologram¶

For this tutorial, we will edit the HelloAR scene from Google ARCore SDK for Unity’s example.

We will change the original AR object to 8i’s hologram, so that you can place a human hologram into the augmented world.

To open the scene, find the scene in project and double click the scene.

Select menu GameObject > 8i > HvrActor. This will create a GameObject with an HvrActor component attached to it.

Select the newly created HvrActor object.

There are a few options to note but for now we will just focus on the Asset/Data/Reference field.

This is the data source that 8i’s hologram engine will read from. As you can see, right now it’s empty. To specify a valid file reference, we can go to folder 8i/examples/assets/hvr, and find “president” folder:

Drag this “president” folder to Asset/Data/Reference field in Inspector panel. To make things even simpler, uncheck the Rendering/Lighting/Use Lighting checkbox:

The hologram should now be visible in the Scene view:

Making A Prefab¶

Because we want the user to be able to drop the hologram whenever they touch the ground, we need to wrap this HvrActor object into a so-called “prefab” and tell the ARCore example code to use it.

With HvrActor still selected, drag the HvrActor object down to a folder in the Project window. Unity will automatically create a prefab for you, and the HvrActor name will turn blue:

To change the HelloAR scene to spawn HvrActor instead of Andy Android, find the Example Controller object in the scene and select it. Drag the newly created HvrActor prefab to the Example Controller’s Inspector panel, replacing both Andy Plane Prefab and Andy Point Prefab with HvrActor:

Because we have stored the HvrActor in a prefab, it is now safe to delete the HvrActor in the scene. Go to Hierarchy, right click on HvrActor, which should have its name in blue, and choose “Delete”.

Save the scene by pressing Cmd+S.

Camera Configuration¶

Next we need to configure the camera to let it render 8i’s hologram.

Note

This step is required; otherwise you will only be able to view the hologram within the Unity Editor.

Find the camera object in Hierarchy > ARCore Device > First Person Camera and select it.

With First Person Camera selected, choose Component > 8i > HvrRender from the menu. This should add an HvrRender component to the camera:

Save the scene by pressing Cmd+S.

Include HVR Data¶

Before we can build the project, there’s an extra step to do. Because we are using a prefab, the hologram data will be loaded dynamically, so we need to explicitly tell Unity to include the data before exporting.

First, right click on the Project window and create an asset of type HvrDataReference. You do it through Create > 8i > HvrDataReference.

After creation, select the asset. Drag the president folder to its data field.

We have now created and configured the asset on disk. Next, we need to include this asset in our scene. Right click in the Hierarchy window and create an empty GameObject.

With the empty object selected, attach a component of type HvrDataBuildInclude. You can find it in Component > 8i > HvrDataBuildInclude.

Drag the configured HvrDataReference asset to Data Reference field.

Finally, choose 8i > Android > Prepare Build from the menu and click OK if a dialogue appears. This prepares and bakes the content so it is ready to be deployed to the Android device. Note that this is an Android-specific step that is required whenever the dynamically loaded 8i content changes. You don’t have to repeat it if no 8i content changed between builds.

Save the scene.

Export and Build¶

That’s it! It’s time to build an APK and deploy it to the device.

Connect your Android phone to your development machine

Enable developer options and USB debugging on your Android phone. This should be done just once.

Menu File > Build Settings, click Player Settings.

Click Build And Run and select a folder to export the APK to. If everything went smoothly, you should see the APK get exported and automatically deployed to the device.

Once the build is up and running, pick up your phone and walk around until a magenta ground is shown, which means you can place your holograms on it.

Tap the white grid ground or blue dots to see how the hologram works within the AR world.

Where to go from now on¶

- Check out our documentation on all the Components and how they interact with each other.

- Take a look at Google Augmented Reality Design Guidelines.

- Download 8i holograms from https://8i.com/developers/downloads/.

ARCore is a platform for building augmented reality apps on Android. ARCore uses three key technologies to integrate virtual content with the real world as seen through your phone's camera:

- Motion tracking allows the phone to understand and track its position relative to the world.

- Environmental understanding allows the phone to detect the size and location of flat horizontal surfaces like the ground or a coffee table.

- Light estimation allows the phone to estimate the environment's current lighting conditions.

This codelab guides you through building a simple demo game to introduce these capabilities so you can use them in your own applications.

- Enabling ARCore through the Player settings

- Adding the ARCore SDK prefabs to the scene

- Scaling objects consistently to look reasonable with respect to the real world.

- Using Anchors to place objects at a fixed location relative to the real world.

- Using detected planes as the foundation of augmented reality objects

- Using touch and gaze input to interact with the ARCore scene

Unity Game Engine

- Recommended version: Unity 2017.4 LTS or later

- Minimum version: 2017.3.0f2

- JDK 8 (JDK 9 is currently not supported by Unity, use JDK 8 instead)

Other items

- Sample assets for the project (arcore-intro.unitypackage, hosted on GitHub)

- ARCore supported device and USB cable

More information about getting started can be found at: developers.google.com/ar/develop/unity/getting-started

Now that you have everything you need, let's start!

Create a new Unity 3D project and change the target platform to Android (under File > Build Settings).

Select Android and click Switch Platform.

Then click Player Settings... to configure the Android specific player settings.

- In Other Settings, disable Multithreaded rendering

- Set the Package name to a unique name e.g. com.<yourname>.arcodelab

- Set the Minimum API level to 7.0 (Nougat) API level 24 or higher

- In XR Settings section at the bottom of the list, enable ARCore Supported

Add the codelab assets

Import arcore-intro.unitypackage into your project. (If you haven't already done so, check the Overview step for a list of prerequisites you need to download). This contains prefabs and scripts that will expedite the parts of the codelab so you can focus on how to use ARCore.

- Delete the default Main Camera game object. We'll use the First Person Camera from the ARCore Device prefab instead.

- Delete the default Directional light game object.

- Add the Assets/GoogleARCore/Prefabs/ARCore Device prefab to the root of your scene. Make sure its position is set to (0,0,0).

- Add the Assets/GoogleARCore/Prefabs/Environmental Light prefab to the root of your scene.

- Add the built-in EventSystem (from the menu: GameObject>UI>EventSystem).

Now you have a scene setup for using ARCore. Next, let's add some code!

The scene controller is used to coordinate between ARCore and Unity. Create an empty game object and change the name to SceneController. Add a C# script component to the object also named SceneController.

For more information on building an AR Optional application, see the Google Developer website.

Open the script. We need to check for a variety of error conditions. These conditions are also checked by the HelloARExample controller sample script in the SDK.

First add the using statement to resolve the class name from the ARCore SDK. This will make auto-complete recognize the ARCore classes and methods used.

SceneController.cs

Call these checks from the Update() method. At the same time, adjust the screen timeout so the screen stays on while we are tracking.

Save the scene with the name 'ARScene' and add it to the list of scenes when building.

Build and run the sample app. If everything is working, you should be prompted for permission to take pictures and record video, after which you'll start seeing a preview of the camera image. Once you see the preview image, you're ready to use ARCore!

If there is an error, you'll want to resolve it before continuing with the codelab.

Unity's scaling system is designed so that when working with the Physics engine, 1 unit of distance can be thought of as 1 meter in the real world. ARCore is designed with this assumption in mind. We use this scaling system to scale virtual objects so they look reasonable in the real world.

For example, an object placed on a desktop should be small enough to fit on the desktop. A reasonable starting point would be ½ foot (15.24 cm), so the scale should be (0.1524, 0.1524, 0.1524).

This might not look the best in your application, but it tends to be a good starting point and then you can fine tune the scale further for your specific scene.
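The arithmetic behind that starting scale can be sketched as follows. This is a minimal Python illustration rather than Unity code; the helper name `uniform_scale_for_size` is hypothetical, and it assumes the unscaled model is exactly 1 unit across.

```python
FEET_TO_METERS = 0.3048  # exact, by definition of the international foot


def uniform_scale_for_size(target_size_m, model_size_units=1.0):
    """Return an (x, y, z) scale so that a model measuring
    `model_size_units` (1 unit = 1 meter) ends up `target_size_m` across."""
    if model_size_units <= 0:
        raise ValueError("model_size_units must be positive")
    factor = target_size_m / model_size_units
    return (factor, factor, factor)


half_foot_m = 0.5 * FEET_TO_METERS
scale = uniform_scale_for_size(half_foot_m)
print(tuple(round(c, 4) for c in scale))  # (0.1524, 0.1524, 0.1524)
```

If the source model is larger or smaller than 1 unit, passing its real size as `model_size_units` keeps the on-screen result at the intended physical size.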

As a convenience, the prefabs used in this codelab contain a component named GlobalScalable which supports using stretch and pinch to size the objects. To enable this, the touch input needs to be captured.

Now when running the application, the user can pinch or stretch the objects to fit the scene more appropriately.

Next, let's detect and display the planes that are detected by ARCore.

ARCore uses a class named DetectedPlane to represent detected planes. This class is not a game object, so we need a prefab that will render the detected planes. The good news is that since ARCore 1.2 the ARCore Unity SDK already includes such a prefab: Assets/GoogleARCore/Examples/Common/Prefabs/DetectedPlaneVisualizer.

ARCore detects horizontal and vertical planes. We'll use these planes in the game. For each newly detected plane, we'll create a game object that renders the plane using the DetectedPlaneVisualizer prefab. As you may have guessed, since ARCore 1.2 the ARCore Unity SDK also includes a convenient script that does exactly this: Assets/GoogleARCore/Examples/Common/Scripts/DetectedPlaneGenerator.cs.

Add DetectedPlaneGenerator to the SceneController object: select the SceneController object, click the Add Component button in the Inspector, and type in DetectedPlaneGenerator. Then set the value of Detected Plane Prefab to the prefab Assets/GoogleARCore/Examples/Common/Prefabs/DetectedPlaneVisualizer.

In SceneController script, create a new method named ProcessTouches(). This method will perform the ray casting hit test and select the plane that is tapped.
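ARCore performs the hit test itself, but the underlying geometry is a ray-plane intersection. The Python sketch below illustrates only that idea; `ray_plane_hit` and its arguments are hypothetical names, and it models an infinite plane rather than the bounded polygon that ARCore tracks.

```python
def ray_plane_hit(origin, direction, plane_point, plane_normal, eps=1e-9):
    """Return the point where a ray meets an infinite plane, or None.

    All arguments are (x, y, z) tuples. The ray starts at `origin` and
    travels along `direction`; the plane passes through `plane_point`
    and faces along `plane_normal`.
    """
    def dot(a, b):
        return sum(p * q for p, q in zip(a, b))

    denom = dot(direction, plane_normal)
    if abs(denom) < eps:
        return None  # ray is parallel to the plane
    to_plane = tuple(p - o for p, o in zip(plane_point, origin))
    t = dot(to_plane, plane_normal) / denom
    if t < 0:
        return None  # plane is behind the ray origin
    return tuple(o + t * d for o, d in zip(origin, direction))


# Camera 1.5 m above a floor plane at y = 0, looking forward and down.
hit = ray_plane_hit((0.0, 1.5, 0.0), (0.0, -1.0, 1.0),
                    (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(hit)  # (0.0, 0.0, 1.5)
```

A `None` result corresponds to a tap that misses every plane, in which case nothing should be selected.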

SceneController.cs

The last step is to call ProcessTouches() from Update. Add this code to the end of the Update() method:

Remember to set firstPersonCamera to the first person camera object in the scene editor!

In ARCore, objects that maintain a constant position as you move around are positioned by using an Anchor. Let's create an Anchor to hold a floating scoreboard.

Write the Scoreboard controller script

In order to position the scoreboard, we'll need to know where the user is looking. So we'll add a public variable for the first person camera.

The scoreboard will also be 'anchored' to the ARScene. An anchor is an object that holds its position and rotation as ARCore processes the sensor and camera data to build the model of the world.

To keep the anchor consistent with the plane, we'll keep track of the plane and make sure the distance in the Y axis is constant.

Also add a member to keep track of the score.

Remember to add using GoogleARCore; to the script to resolve the Anchor class!

Just as in the previous step, save the script, switch to the scene editor, and set 'First Person Camera' property to ARCore Device/First Person Camera from the scene hierarchy.

We'll place the scoreboard above the selected plane. This way it will be visible and indicate which plane we're focused on.

In ScoreboardController script, in the Start() method, add the code to disable the mesh renderers.

Create the function SetSelectedPlane()

This is called from the scene controller when the user taps a plane. When this happens, we'll create the anchor for the scoreboard.

Create the function CreateAnchor()

The CreateAnchor method does 5 things:

- Raycast a screen point through the first person camera to find a position to place the scoreboard.

- Create an ARCore Anchor at that position. ARCore adjusts this anchor as it builds its model of the real world so that it stays at the same real-world location.

- Attach the scoreboard prefab to the anchor as a child object so it is displayed correctly.

- Record the yOffset from the plane. This will be used to keep the scoreboard at the same height relative to the plane as the plane position is refined.

- Enable the renderers so the scoreboard is drawn.

Add code to ScoreboardController.Update()

First check for tracking to be active.

ScoreboardController.cs

The last thing to add is to rotate the scoreboard towards the user as they move around in the real world and adjust the offset relative to the plane.
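The two adjustments can be sketched as plain math. This is a Python illustration under stated assumptions, not the codelab's script: `yaw_towards` and `follow_plane_height` are hypothetical helpers, positions are (x, y, z) tuples with Y pointing up, and a yaw of 0 is taken to mean facing +Z.

```python
import math


def yaw_towards(board_pos, camera_pos):
    """Yaw in degrees (rotation about the vertical Y axis) that turns an
    object at `board_pos` to face `camera_pos`. Height is ignored so the
    board stays upright."""
    dx = camera_pos[0] - board_pos[0]
    dz = camera_pos[2] - board_pos[2]
    return math.degrees(math.atan2(dx, dz))


def follow_plane_height(board_pos, plane_y, y_offset):
    """Keep the board `y_offset` above the plane as the plane estimate moves."""
    return (board_pos[0], plane_y + y_offset, board_pos[2])


board = (0.0, 1.0, 2.0)
y_offset = board[1] - 0.0  # recorded at anchor creation, plane then at y = 0
board = follow_plane_height(board, plane_y=0.05, y_offset=y_offset)
print(board)
print(yaw_towards(board, (2.0, 1.6, 2.0)))
```

Recording the offset once and reapplying it every frame is what keeps the scoreboard from drifting vertically while ARCore refines the plane's height.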

Call SetSelectedPlane() from the scene controller

Switch back to the SceneController script and add a member variable for the ScoreboardController.

SceneController.cs

Save the scripts and the scene. Build and run the app! Now it should display planes as they are detected, and if you tap one, you'll see the scoreboard!

Now that we have a plane, let's put a snake on it and move it around on the plane.

Create a new Empty Game object named Snake.

Add the existing C# script (Assets/Codelab/Scripts/Slithering.cs). This controls the movement of the snake as it grows. In the interest of time, we'll just add it, but feel free to review the code later on.

Add a new C# script to the Snake named SnakeController.

In SnakeController.cs, we need to track the plane that the snake is traveling on. We'll also add member variables for the prefab for the head, and the instance:

Remember to add using GoogleARCore; to the script to resolve the DetectedPlane class!

Create the SetPlane() method

In SnakeController script, add a method to set the plane. When the plane is set, spawn a new snake.

SnakeController.cs

Now add a member variable to the SceneController.cs to reference the Snake.

SceneController.cs

To move the snake, we'll use where we are looking as a point that the snake should move towards. To do this, we'll raycast the center of the screen through the ARCore session to a point on a plane.

First let's add a game object that we'll use to visualize where the user is looking at.

Edit the SnakeController and add member variables for the pointer and the first person camera. Also add a speed member variable.

Set the game object properties

Save the script and switch to the scene editor.

Add an instance of the Assets/CodelabPrefabs/gazePointer to the scene.

Then, select Snake object, in Inspector view, set the pointer property to the instance of the gazePointer, and the firstPersonCamera to the ARCore device's first person camera.

SnakeController.cs

SnakeController.Update().

Move towards the pointer

Once the snake is heading in the right direction, move it towards the pointer. We want to stop just before the snake reaches the same spot to avoid a weird nose spin. Add the code below to the end of SnakeController.Update().
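The stop-short behaviour can be illustrated independently of Unity. The Python sketch below is an assumption-laden illustration, not the codelab's code: `step_towards`, `stop_distance`, and the chosen speed and frame rate are all hypothetical.

```python
import math


def step_towards(position, target, speed, dt, stop_distance=0.05):
    """Advance `position` towards `target` by at most speed * dt,
    but never move closer than `stop_distance` to the target."""
    delta = tuple(t - p for p, t in zip(position, target))
    distance = math.sqrt(sum(c * c for c in delta))
    if distance <= stop_distance:
        return position  # close enough: stay put instead of spinning on the spot
    step = min(speed * dt, distance - stop_distance)
    return tuple(p + c / distance * step for p, c in zip(position, delta))


head = (0.0, 0.0, 0.0)
pointer = (0.0, 0.0, 1.0)
for _ in range(100):  # simulate 100 frames at 60 frames per second
    head = step_towards(head, pointer, speed=0.5, dt=1 / 60)
print(head)
```

Clamping the step to `distance - stop_distance` means the head settles just short of the pointer instead of overshooting and turning back on itself.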

Remember to add using GoogleARCore; to the script to resolve the DetectedPlane class!

Add the food tag

Add a tag in the editor by dropping down the tag selector in the object inspector, and select 'Add Tag'. Add a tag named 'food'. We'll use this tag to identify food objects during collision detection.

Important: Remember to add the tag using the tag manager! If you don't, the food won't be recognized and won't be eaten!

FoodController.cs

In the FoodController.Update() method:

- Check for a null plane; if we don't have a plane we can't do anything.

- Check for a valid detectedPlane. Again, if the plane is not tracking, do nothing.

FoodController.cs

- Lastly, increase the age of existing food and destroy it if expired:
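The ageing rule in the last step can be sketched in a few lines. This is a Python illustration of the logic only, not the contents of FoodController.cs; the `Food` class, `age_and_expire`, and the 10-second lifetime are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Food:
    age: float = 0.0  # seconds since the item was spawned


def age_and_expire(foods, dt, max_age):
    """Add `dt` seconds to every item and return only those still fresh."""
    for food in foods:
        food.age += dt
    return [food for food in foods if food.age < max_age]


foods = [Food(age=0.0), Food(age=9.5)]
foods = age_and_expire(foods, dt=1.0, max_age=10.0)
print(len(foods))  # 1
```

In a game loop, `dt` would be the time elapsed since the previous frame, so expiry does not depend on the frame rate.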

FoodController.cs

Call the food controller's SetSelectedPlane() in SceneController.SetSelectedPlane():

Add it to the instance when we spawn.

In SnakeController.SpawnSnake(), add the component to the new instance.

FoodConsumer.cs

Remember that Scoreboard from the beginning of the codelab? Well, now it is time to actually use it!

In SceneController.Update(), set the score to the length of the snake:

ScoreboardController.cs

Add GetLength() in the SnakeController

Other Resources

As you continue your ARCore exploration, check out these other resources:

- AR Concepts: https://developers.google.com/ar/discover/concepts

- Google Developers ARCore https://developers.google.com/ar/

- GitHub projects for ARCore: https://github.com/google-ar

- AR experiments for inspiration and to see what could be possible: https://experiments.withgoogle.com/ar